What is a framework that helps us conceptualize how technology is used to enhance teaching and learning called?

Keyword searched by Bùi Nhật Dương. Updated: 2022-09-27 22:24:05.

Teaching technology integration requires teacher educators to grapple with (a) constantly changing, politically impacted professional requirements, (b) continuously evolving educational technology resources, and (c) varying needs across content disciplines and contexts. Teacher educators cannot foresee how their students may be expected to use educational technologies in the future or how technologies will change during their careers. Therefore, training student teachers to practice technology integration in meaningful, effective, and sustainable ways is a daunting challenge. We propose PICRAT, a theoretical model for responding to this need.

Contents

- Theoretical Models
- Model Purposes and Components
- Emergence of Technology Integration Models
- A Good Model for Teaching Technology Integration
- Weaknesses of Existing Technology Integration Models
- PIC: Passive, Interactive, Creative
- RAT: Replacement, Amplification, Transformation
- PICRAT Matrix
- Benefits of PICRAT
- Limitations or Difficulties of PICRAT
- What is the framework for technology integration?
- Why must teachers be equipped with TPACK in teaching social studies?
- In what ways can using graphing calculators aid students in understanding mathematical concepts?
- What is the most significant benefit of instituting distance education within a school district?

Currently, various theoretical models are used to help student teachers conceptualize effective technology integration, including Technology, Pedagogy, and Content Knowledge (TPACK; Koehler & Mishra, 2009), Substitution – Augmentation – Modification – Redefinition (SAMR; Puentedura, 2003), Technology Integration Planning (TIP; Roblyer & Doering, 2013), Technology Integration Matrix (TIM; Harmes, Welsh, & Winkelman, 2022), Technology Acceptance Model (TAM; Venkatesh, Morris, Davis, & Davis, 2003), Levels of Technology Integration (LoTi; Moersch, 1995), and Replacement – Amplification – Transformation (RAT; Hughes, Thomas, & Scharber, 2006).

Though these models are commonly referenced throughout the literature to justify methodological approaches for studying educational technology, little theoretical criticism and minimal evaluative work can be found to gauge their efficacy, accuracy, or value, either for improving educational technology research or for teaching technology integration (Kimmons, 2015; Kimmons & Hall, 2022). Relatively few researchers have devoted effort to critically evaluating these models, categorizing and comparing them, supporting their ongoing development, understanding assumptions and processes for adopting them, or exploring what constitutes good theory in this realm (Archambault & Barnett, 2010; Archambault & Crippen, 2009; Brantley-Dias & Ertmer, 2013; Graham, 2011; Graham, Henrie, & Gibbons, 2014; Kimmons, 2015; Kimmons & Hall, 2016a, 2016b, 2022).

In other words, educational technologists seem to be heavily involved in what Kuhn (1996) considered “normal science” without critically evaluating competing models, understanding their use, and exploring their development over time. Reticence to engage in critical discourse about theory and realities that shape practical technology integration has serious implications for practice, leading to what Selwyn (2010) described as “an obvious disparity between rhetoric and reality [that] runs throughout much of the past 25 years of educational technology scholarship” (p. 66), leaving promises of educational technologies relatively unrealized.

Needing a critical discussion of extant models and theoretical underpinnings of practice, we provide a conceptual framework, including (a) what theoretical models are and why we need them for teaching technology integration, (b) how they are adopted and developed over time, (c) what makes them good or bad, and (d) how existing models of technology integration cause struggle in teacher preparation. With this backdrop, we propose a new theoretical model, PICRAT, built on the previous work of Hughes et al. (2006), which can guide student teachers in developing technology integration literacies.

Theoretical Models

Authors frequently use terms such as model, theory, paradigm, and framework interchangeably (e.g., paradeigma is Greek for pattern, illustration, or model; cf. Dubin, 1978; Graham et al., 2014; Kimmons & Hall, 2016a; Kimmons & Johnstun, 2022; Whetten, 1989). However, we rely on the term theoretical model for technology integration models, as it encapsulates the conceptual, organizational, and reflective nature of constructs we discuss.

Model Purposes and Components

A theoretical model conceptually represents phenomena, allowing individuals to organize and understand their experiences, both individually and interactively. All disciplines in hard and social sciences utilize theoretical models, and professionals use these models to make sense of natural and social worlds that are inherently unordered, complex, and messy. Summarizing Dubin’s (1978) substantial work on theory development, Whetten (1989) explained four essential elements for all theoretical models: the what, how, why, and who/where/when. First, models must include sufficient variables, constructs, concepts, and details explaining the what of studied phenomena to make the theories comprehensive but sufficiently limited to allow for parsimony and to prevent overreaching.

Second, models must address how components are interrelated: the categorization or structure of the model allowing theorists to make sense of the world in novel ways. Third, models must provide logic and rationale to support why components are related in the proposed form. Herein the model’s assumptions generally linger (explicitly or implicitly); its argumentative strength relies on the theorist’s ability to make a strong case that it is reasonable.

Fourth, models must be bounded by a context representing the who, where, and when of its application. Models are not theories of everything; by bounding the model to a specific context (e.g., U.S. teacher education), theorists can increase purity and more readily respond to critics (Dubin, 1978).

Emergence of Technology Integration Models

Many teacher educators adopt technology integration models in anarchic ways or according to camps (Feyerabend, 1975; Kimmons, 2015; Kimmons & Hall, 2016a; Kimmons & Johnstun, 2022). That is, they use models enculturated to them via their own training without justification or comparison of competing models. Literature reflects these camps, as instruments are built and studies are framed without comparison of models or rationales for choice (cf. Kimmons, 2015b). Each camp speaks its own language (TPACK, TIM, TAM, SAMR, LoTi, etc.), neither recognizing other camps nor acknowledging relationships to them.

Whether this disconnect results from theoretical incommensurability or opportunism (cf. Feyerabend, 1975; Kuhn, 1996), we advise theoretical pluralism: “that various models are appropriate and valuable in different contexts” (Kimmons & Hall, 2016a, p. 54; Kimmons & Johnstun, 2022). Thus, we do not perceive a need to conduct “paradigm wars that seek to establish a single theoretical perspective or methodology as superior,” considering such to be an “unproductive disputation” (Burkhardt & Schoenfeld, 2003, p. 9).

We contend, however, that the field’s ongoing adoption of theoretical models with little discussion of their affordances, limitations, contradictions, and relationships to others is of serious concern, because “no [model] ever solves all the problems it defines,” and “no two [models] leave all the same problems unsolved” (Kuhn, 1996, p. 110). The difficulty with theoretical camps in this field is not pluralism but absence of mutual understanding and meaningful cross-communication among camps, along with the failure to weigh the advantages and disadvantages of competing theories, revealing that educators do not take them seriously (Willingham, 2012). Unwillingness to dialogue across camps or to evaluate critically the underlying theories shaping diverse camps leads to professional siloing and prevents our field from effectively grappling with the multifaceted complexities of technology integration in teaching.

A Good Model for Teaching Technology Integration

Kuhn (2013) argued for a structure to model adoption, with core characteristics identifying certain theoretical models as superior. These characteristics vary somewhat by field and context of application; theoretical models in this field serve different purposes than do models in the hard sciences, and teacher educators will utilize models differently than will educational researchers or technologists (Gibbons & Bunderson, 2005).

Kimmons and Hall (2016a) said, “Determinations of [a model’s] value are not purely arbitrary but are rather based in structured value systems representing the beliefs, needs, desires, and intents of adoptees” in a particular context (p. 55). Six criteria have been proposed for determining quality of teacher education technology integration models: (a) clarity, (b) compatibility, (c) student focus, (d) fruitfulness, (e) technology role, and (f) scope (see Table 1).

Table 1

Six Criteria and Guiding Questions for Evaluating Technology Integration Models for Student Teachers

First, technology integration models should be “simple and easy to understand conceptually and in practice” (Kimmons & Hall, 2016a, pp. 61–62), eschewing explanations and constructs that invite confusion and “hidden complexity” (Graham, 2011, p. 1955; Kimmons, 2015). Ideally, a model is concise enough to be quickly explained to teachers and easily applied in their practice — intuitive, practical, and easy to value. Models requiring lengthy explanation, introducing too many constructs, or diving into issues not central to teachers’ everyday needs should be reevaluated, simplified, or avoided.

Second, compatibility (i.e., alignment) with “existing educational and pedagogical practices” (Kimmons & Hall, 2016a, p. 55) is important. Teachers want practical models that help them address everyday classroom issues with limited conceptual overhead. We concluded the following in an earlier empirical study:

Teachers find themselves in a world driven by external requirements for their own performance and the performance of their students, and broad, theoretical discussions about how technology is transforming the educational system are not very helpful…. The typical teacher seems to be most concerned with addressing the needs of the local students under their care in the manner prescribed to them by their institutions. (Kimmons & Hall, 2016b, p. 23)

Thus, technology integration models should emphasize “discernible impact and realistic access to technologies” (p. 24) rather than broad concepts (e.g., social change) or unrealistic technological requirements (e.g., 1:1 teacher–device ratios in poor communities).

Third, fruitful models should encourage adoption among “a diversity of users for diverse purposes and yield valuable results crossing disciplines and traditional silos of practice” (Kimmons & Hall, 2016a, p. 58). We intend that technology integration models used to teach teachers should elicit fruitful thinking: yielding connections and thoughtful lines of questioning, expanding across multiple areas of practice in ways that would not have occurred without the model, and yielding insights beyond the initial scope of the model’s implementation.

Fourth, technology’s role should serve as a means to an end, not an end in itself — avoiding technocentric thinking (Papert, 1987, 1990). Though referred to as technology integration models, their goal should go beyond integration to emphasize improved pedagogy or learning. The model should not merely guide educators in using technology without a foundation for justifying its use. This means-oriented view should place technology as one of many factors to influence desired outcomes.

Fifth, suitable scope is necessary for guiding practitioners in the what, how, and why of technology integration. While being compatible with existing practices, models should also influence teachers in better-informed choices about technology use. As Burkhardt and Schoenfeld (2003) explained,

Most of the theories that have been applied to education are quite broad. They lack what might be called “engineering power” … [or] the specificity that helps to guide design, to take good ideas and make sure that they work in practice. … Education lags far behind [other fields] in the range and reliability of its theories. By overestimating theories’ strength … damage has been done. … Local or phenomenological theories … are currently more valuable in design. (p. 10)

In this way “scope and compatibility may seem at odds … models that excel in compatibility may be perceived as supporting the status quo, while models with global scope may be perceived as supporting sweeping change” (Kimmons & Hall, 2016a, p. 57). However, a good model balances comprehensiveness and parsimony (Dubin, 1978), both guiding teachers practically and prompting them conceptually in critically evaluating their practice against a larger backdrop of social and educational problems. Any such model should seek to apply to all education professionals broadly while fixating on a “population of exactly one” (p. 137). In our context, a model’s scope should focus squarely on student teachers, with possible applicability to practicing teachers and others as well.

Finally, student focus is vital for a technology integration model. As Willingham (2012) explained, “changes in the educational system are irrelevant if they don’t ultimately lead to changes in student thought” (p. 155). Too often the literature surrounding technology integration ignores students in favor of teacher- or activity-centered analyses of practice: perhaps the technology–pedagogy relationship or video as lesson enhancement. “Though some models may allude to student outcomes, they may not give these outcomes … [primacy] in the technology integration process” (Kimmons & Hall, 2016a, p. 61), which may signal to teachers that student considerations are not of primary importance.

Weaknesses of Existing Technology Integration Models

Each of the most popular technology integration models has strengths and weaknesses. To justify the need for a model better suited for the field, we summarize in this section the major limitations or difficulties inherent to seven existing models — LoTi, RAT, SAMR, TAM, TIM, TIP, and TPACK — in the context of guiding technology integration for student teachers. This brief summary will not do justice to the benefits of each of these models. Additional detail may be obtained from the previously referenced publications, including Kimmons and Hall (2016a).

We may be critiqued for providing strawman arguments against models or ignoring their affordances, but we have chosen merely to suggest that these might be areas where each of these models may have limitations for teacher education. Several of these areas have been explored in prior literature, whereas others are drawn from our own experiences as teacher educators in the technology integration space, briefly summarized in Table 2. Additionally, these critiques may not apply in other education-related contexts (e.g., educational administration or instructional design) and are squarely focused on teacher education. Even though we do not provide ironclad arguments or evidence for each claim listed in the subsequent section (doing so would require multiple studies and book-length treatment), voicing these frustrations is necessary for proceeding and for articulating a gap in this professional space. In sum, our goal is not to convince anyone that each enumerated difficulty is incontrovertibly true but merely to provide transparency about our own reasoning and experiences.

Table 2

Difficulties Using Prominent Models in Teacher Education

| Model | Difficulties | Source |
| --- | --- | --- |
| RAT | … Student Focus: Students are implied in pedagogy but are not central. | Hughes, Thomas, & Scharber (2006) |
| SAMR | Clarity: Level boundaries are unclear (e.g., substitution vs. augmentation). Fruitfulness: Level distinctions may not be meaningful for practitioners. Student Focus: Student activities are implied at each level but are not explicit or inherent in each level’s definition. | Puentedura (2003) |
| TAM | Compatibility: Not education- or learning-focused but is rather focused on user perceptions of technology usefulness (i.e., researcher or administrator focus). Fruitfulness: Emphasis on user perceptions and adoption yields little value for teachers. Role of Technology: Technology adoption is the goal. Scope: Not parsimonious enough to focus on educators and students, but also not comprehensive enough to account for pedagogy, et cetera. Student Focus: Students are not included or implied (teacher use only). | Venkatesh, Morris, Davis, & Davis (2003) |
| TIM | Clarity: Levels are not mutually exclusive (e.g., the same experience may be collaborative, constructive, and authentic) and potentially unintuitive. Fruitfulness: Too many levels are provided (25 scenarios between two axes), and levels may not be hierarchical (e.g., infusion vs. adaptation). Scope: May not be sufficiently parsimonious for teacher self-improvement and focuses on overall teacher development (e.g., extensive use) rather than specific instances. | Harmes, Welsh, & Winkelman (2022) |
| TIP | Clarity: Determining relative advantage is a precursor to the model but is itself not adequately modeled. Scope: May ignore other important aspects of practice beyond lesson planning and may overcomplicate the process. Student Focus: Students are implied in learning objectives and relative advantage but are not central. | Roblyer & Doering (2013). Note: The updated version of this model, TTIPP, was not reviewed for this paper (Roblyer & Hughes, 2022). |
| TPACK | Clarity: Boundaries are fuzzy, and hidden complexities seem to exist. Compatibility: Does not explicitly guide useful classroom practices (e.g., lesson planning). Fruitfulness: Distinctions may not be empirically verifiable or hierarchical (e.g., TPACK vs. PCK). Scope: May be too comprehensive for teachers (i.e., lacks parsimony for their context). | Koehler & Mishra (2009); Mishra & Koehler (2007) |

Clarity. Many technology integration models are unclear for teachers, being overly theoretical, deceptive, unintuitive, or confusing. For instance, SAMR, TIM, and TPACK provide a variety of levels (classifications) of integration but may not clearly define them or distinguish them from other levels. Student teachers, thus, may have difficulty understanding them or may artificially classify practices in inaccurate or useless ways. Most models include specific concepts that may be difficult for teachers to comprehend (e.g., the bullseye area in TPACK, relative advantage in TIP, substitution vs. augmentation in SAMR, or transformation in TIM and RAT), leading to superficial understanding of complex issues or to unsophisticated rationales for relatively shallow technology use.

Recognizing the contextual complexities of technology integration, Mishra and Koehler (2007) argued that every instance of integration is a “wicked problem.” Although this characterization may be accurate, teachers need models to guide them in grappling clearly and intuitively with such complexities.

Compatibility. Models we consider incompatible for K–12 teachers emphasize constructs that are impractical or not central to a teacher’s daily needs. TAM, for instance, focuses entirely on user perceptions influencing adoption, with little application for developing lesson plans, guiding student learning, or managing classroom behaviors. Even models developed for educators may focus on activities incompatible with teacher needs (e.g., student activism in LoTi) or be too theoretical to apply directly to teacher practice (e.g., technological content knowledge in TPACK).

Fruitfulness. Models that lack fruitfulness do not lead teachers to meaningful reflection, but rather yield unmeaningful evaluations of practice. Models with multiple levels of integration, such as LoTi, SAMR, and TIM, need a purpose for classifying practice at each level; teachers must understand why classifying practice as augmentation versus modification (SAMR) or as awareness versus exploration (LoTi) is meaningful. SAMR has four levels of integration, LoTi has six, and TIM has 25 across two axes (5×5). For teachers, too many possibilities, particularly if nonhierarchical, can make a model confusing and cumbersome if the goal in their context is to help them quickly reflect on their practice and improve as needed.

Technology role. Some models are technocentric: focused on technology use as the goal rather than as a means to an educational result. TAM, for instance, focuses only on technology adoption, not on improving teaching and learning. Other models may focus on improving practice but be largely technodeterministic in their view of technology as improving practice rather than creating a space for effective pedagogies to emerge.

Scope. Models with poor scope do not balance effectively between comprehensiveness and parsimony, being either too directive or too broad for meaningful application. TIP, for instance, is overly directive, simulating an instructional design approach to creating TPACK-based lesson plans but too narrowly focused to go beyond this. In contrast, the much broader TPACK provides teachers with a conceptual framework for synchronizing component parts but without concrete guidance on putting it into practice. To be useful, models should ignore aspects of technology integration not readily applicable for teachers, but provide sufficient comprehensiveness to guide practice.

Student focus. Most models of technology integration do not meaningfully focus on students, focusing on technology-adoption or teacher-pedagogy goals rather than clarity on what students do or learn. Models may merely assume student presence with pedagogical considerations, but failure to consider students the center of practitioner models prevents alignment with student-focused practitioners’ needs.

Summary of theoretical models. Many of these models were initially developed for broader audiences and retroactively applied to preparing teachers. Others were developed for teacher pedagogical practices at a conceptual level without providing sufficient guidance on actual implementation. Although many models benefit education professionals, a theoretical model for teacher education is needed that (a) is clear, compatible, and fruitful; (b) emphasizes technology use as a means to an end; (c) balances parsimony and comprehensiveness; and (d) focuses on students. We view theoretical models in education opportunistically (à la Feyerabend, 1975). Rather than seeking one model for all contexts and considerations, we recognize a need to provide teachers with a model that is most useful for their concrete practice. While these other models have a place (e.g., TPACK is great for conceptualizing how to embed technology at an administrative level across courses), something is needed with better-tuned “engineering power” for teacher education (Burkhardt & Schoenfeld, 2003, p. 10).

As a theoretical model to guide teacher technology integration, PICRAT enables teacher educators to encourage reflection, prescriptively guide practice, and evaluate student teacher work. Any theoretical model will explain particular attributes well and neglect others, but PICRAT is a student-focused, pedagogy-driven model that can be effective for the specific context of teacher education: it is comprehensible and usable by teachers as it guides the most worthwhile considerations for technology integration.

We began developing this model by considering the two most important questions a teacher should reflect on and evaluate when using technology in teaching, considering time constraints, training limitations, and their emic perspective on their own teaching. Based on research emphasizing the need for models to focus on students (Wentworth et al., 2009; Wentworth, Graham, & Tripp, 2008), our first question was, “What are students doing with the technology?” Recognizing the importance of teachers’ reflection on their pedagogical practices, our second question was, “How does this use of technology impact the teacher’s pedagogy?”

Teachers’ answers to these questions on a three-level response metric comprise what we call PICRAT. PIC refers to the three options associated with the first question (passive, interactive, and creative); and RAT represents the three options for the second (replacement, amplification, and transformation).

PIC: Passive, Interactive, Creative

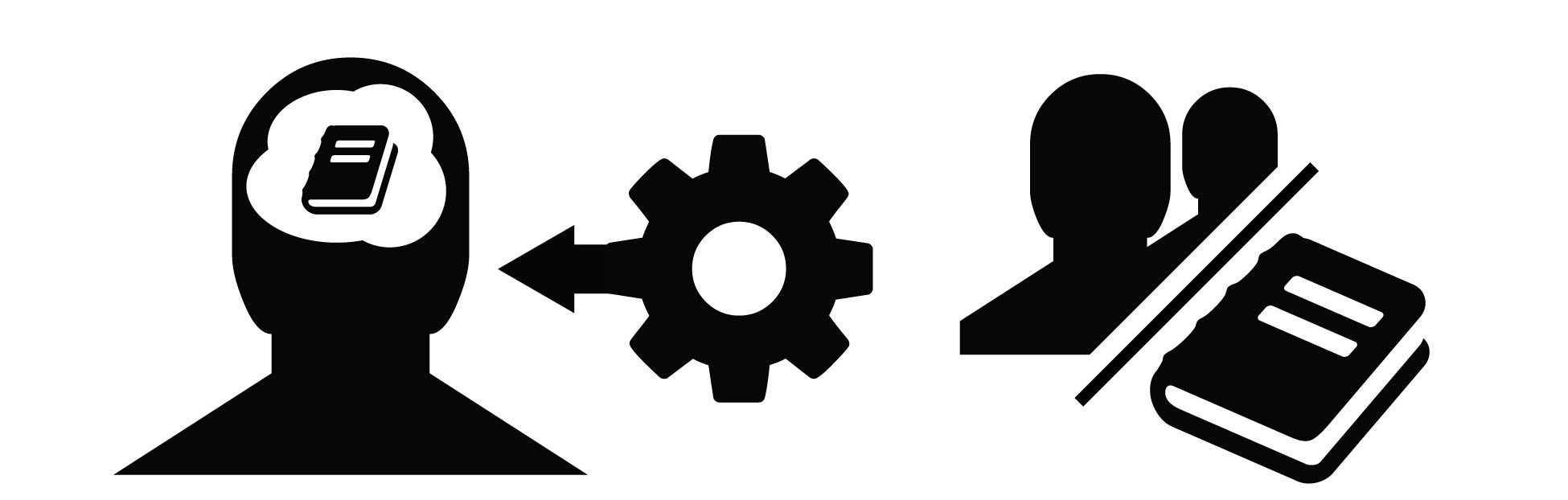

First, we emphasize three basic student roles in using technology: passive learning (receiving content passively), interactive learning (interacting with content and/or other learners), and creative learning (constructing knowledge via the construction of artifacts; Papert & Harel, 1991). Teachers have traditionally incorporated technologies offering students knowledge as passive recipients (Cuban, 1986). Converting lecture notes to PowerPoint slides or showing YouTube videos uses technology for instruction that students passively observe or listen to rather than engaging with as active participants (Figure 1).

Figure 1. The passive level of student learning in PIC.

Listening, observing, and reading are essential but not sufficient learning skills. Our experiences have shown that most teachers who begin utilizing technology to support instruction work from a passive level, and they must be explicitly guided to move beyond this first step.

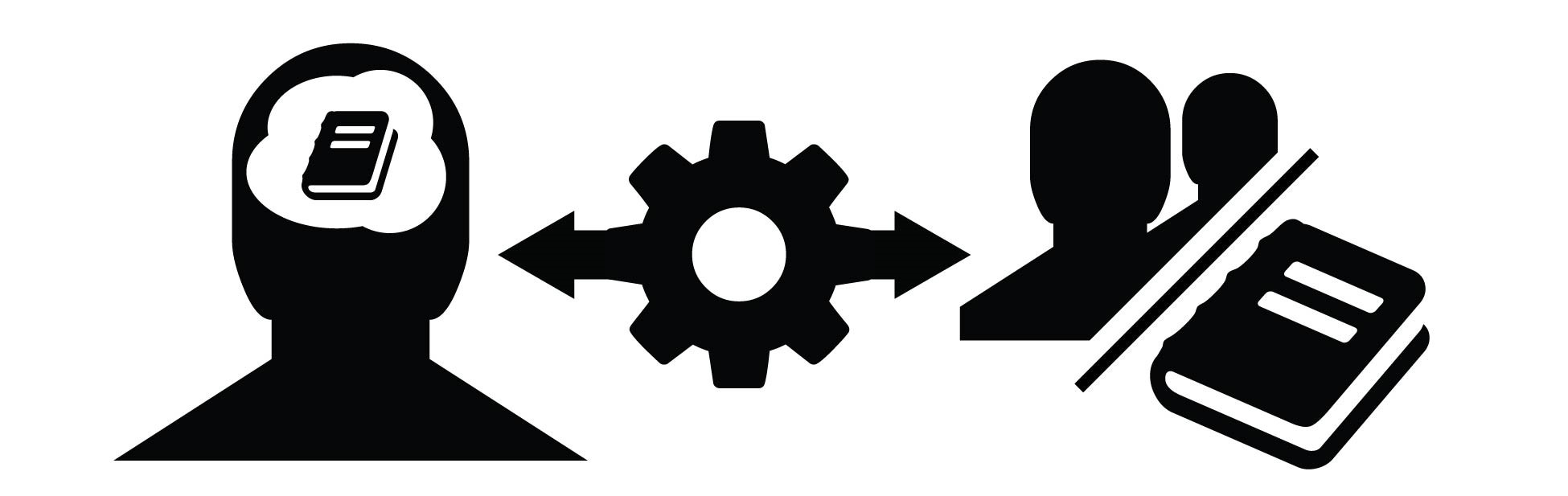

Much lasting and impactful learning occurs only when students are interactively engaged through exploration, experimentation, collaboration, and other active behaviors (Kennewell, Tanner, Jones, & Beauchamp, 2008). Through technology this learning may involve playing games, taking computerized adaptive tests, manipulating simulations, or using digital flash cards to support recall. This interactive level of student use is fundamentally different from passive uses, as students are directly interacting with the technology (or with other learners through the technology), and their learning is mediated by that interaction (Figure 2).

Figure 2. The interactive level of student learning in PIC.

This level may require certain affordances of the technology, but potential for interaction is not the same as interactive learning. Learning must occur due to the interactivity; the existence of interactive features is not sufficient. An educational game might require students to solve a problem before showing the optimal solution or providing additional content, which means that students must interact with the game by making choices, solving problems, and responding to feedback, thereby actively directing aspects of their own learning. The interactive level is still limited, however. Despite recursive interaction with the technology, learning is largely structured by the technology rather than by the student, which may limit transferability and meaningful connections to previous learning.

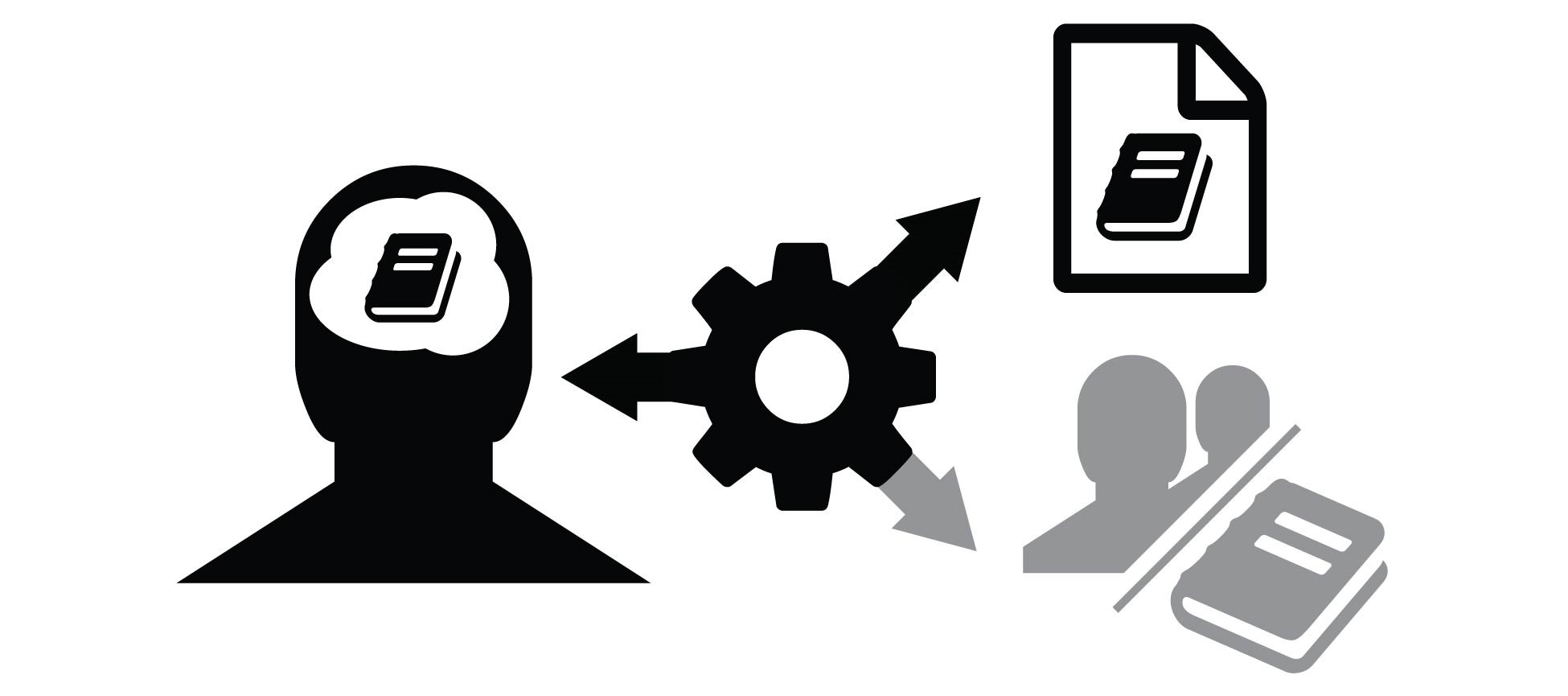

The creative level of student technology use bypasses this limitation by having students use the technology as a platform to construct learning artifacts that instantiate learning mastery. Lasting, meaningful learning occurs best as students apply concepts and skills by constructing real-world or digital artifacts to solve problems (Papert & Harel, 1991), aligning with the highest level of Bloom’s revised taxonomy of learning (Anderson, Krathwohl, & Bloom, 2001).

Technology construction platforms may include authoring tools, coding, video editing, sound mixing, and presentation creation, allowing students to give form to their developing knowledge (Figure 3). In learning the fundamentals of coding, students might create a program that moves an avatar from Point A to Point B, or they might learn biology principles by creating a video to teach others. In either instance the technology may also enable the student to interact with other learners or additional content during the creation process, but the activity can be creative without such interaction. In creative learning activities, students may directly drive the learning as they produce artifacts (giving form to their own conceptual constructs) and iteratively solve problems by applying the technology to refine their content understanding.

Figure 3. The creative level of student learning in PIC.

Across these three levels, similar technologies might be used to provide different learning experiences for students. For instance, electronic slideshow software like PowerPoint might be used by a teacher alternatively (P) to provide lecture notes about the solar system, (I) to offer a game about planets, or (C) to provide a platform for creating an interactive kiosk to teach other students about solar radiation. Across these three applications, the same technology is used to teach the same content, but the activity engaging the student through the technology differs, and the student’s role in the learning experience influences what is learned, what is retained, and how it can be applied to other situations.

This focus on student behaviors through the technology avoids technocentrist thinking (ascribing educational value to the technology itself) and forces teachers to consider how their students are using the tools provided to them. All three levels of PIC might be appropriate for different learning goals and contexts.
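The PIC question ("What are students doing with the technology?") can be sketched as a small data structure. The following is only an illustrative sketch, not part of the model itself: the `PIC` enum, the `Activity` record, and the wording of each entry are hypothetical names chosen here to restate the slideshow example, in which one tool supports three different student roles.

```python
from dataclasses import dataclass
from enum import Enum


class PIC(Enum):
    """Student's role when using the technology (hypothetical encoding)."""
    PASSIVE = "passive"          # receiving content: listening, observing, reading
    INTERACTIVE = "interactive"  # learning mediated by interaction with content or peers
    CREATIVE = "creative"        # constructing knowledge by constructing artifacts


@dataclass(frozen=True)
class Activity:
    """Hypothetical record of one classroom use of a tool."""
    tool: str
    description: str
    student_role: PIC


# The slideshow example from the text: same tool, three student roles.
activities = [
    Activity("slideshow software", "lecture notes about the solar system", PIC.PASSIVE),
    Activity("slideshow software", "a game about planets", PIC.INTERACTIVE),
    Activity("slideshow software", "student-built kiosk about solar radiation", PIC.CREATIVE),
]

# One tool, yet every PIC level is represented.
tools = {a.tool for a in activities}
roles = {a.student_role for a in activities}

for a in activities:
    print(f"{a.student_role.value:<12} {a.tool}: {a.description}")
```

Keeping the tool and the student role as separate fields mirrors the point made above: the classification attaches to what students do, not to the software.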

RAT: Replacement, Amplification, Transformation

To address the question of how technology use impacts teacher pedagogy, we adopted the RAT model proposed by Hughes et al. (2006), which has similarities to the enabling, enhancing, and transforming model proposed by our second author (Graham & Robison, 2007). Though the theoretical underpinnings of RAT have not been explored in the literature outside of the authors’ initial conference proceeding, we have applied it in previous studies (a) to organize understanding of how teachers think about technology integration (Amador, Kimmons, Miller, Desjardins, & Hall, 2015; Kimmons, Miller, Amador, Desjardins, & Hall, 2015), (b) to compare models for evaluation (Kimmons & Hall, 2022), and (c) to illustrate particular model strengths (Kimmons, 2015; Kimmons & Hall, 2016b).

Like PIC, the acronym RAT identifies three potential responses to a target question: In any educational context technology may have one of three effects on a teacher’s pedagogical practice: replacement, amplification, or transformation.

Our experience has shown that teachers who are beginning to use technology to support their teaching tend to use it to replace previous practice, such as digital flashcards for paper flashcards, electronic slides for an overhead projector, or an interactive whiteboard for a chalkboard. That is, they transfer an existing pedagogical practice into a newer medium with no functional improvement to their practice.

Similar replacements may be found in other models: substitution in SAMR or entry in TIM. This level of use is not necessarily poor practice (e.g., digital flashcards can work well in place of paper flashcards), but it demonstrates that (a) technology is not being used to improve practice or address persistent problems and (b) no justifiable advantage to student learning outcomes is achieved from using the technology. If teachers and administrators seek funding to support their technology initiatives for use that remains at the replacement level, funding agencies would (correctly) find little reason to invest limited school funds and teacher time into new technologies.

The second level of RAT, amplification, represents teachers’ use of technology to improve learning practices or outcomes. Examples include using review features of Google Docs for students to provide each other more efficient and focused feedback on essays or using digital probes to collect data for analysis in LoggerPro, thereby improving data management and manipulation.

Using technology in these amplification scenarios incrementally improves teachers’ practice but does not radically change their pedagogy. Amplification improves upon or refines existing practices, but it may reach undesirable limits insofar as it may not allow teachers to fundamentally rethink and transform their practices.

The transformation level of RAT uses technology to enable, not merely strengthen, the pedagogical practices enacted. Taking away the technology would eliminate that pedagogical strategy, as technology’s affordances create the opportunity for the pedagogy and intertwine with it (Kozma, 1991). For example, students might gather information about their local communities through GPS searches on mobile devices, analyze seismographic data using an online simulation, or interview a paleontological expert at a remote university using a Web video conferencing service such as Zoom (https://zoom.us). None of these experiences could have occurred via alternative, lower tech means.

Of all the processes affected by PICRAT, transformation is likely the most problematic, because it reflects a longstanding debate on whether technology can ever have a transformative effect on learning (e.g., Clark, 1994). Various journal articles and books have tackled this issue, and this article cannot do justice to the debate. Many researchers and practitioners have noted that transformative uses of technology for learning may only refer to functional improvements on existing practices or greater efficiency. A tipping point exists, however, where greater efficiency becomes so drastic that new practices can no longer be distinguished from old in terms of efficiencies alone.

Consider the creation of the incandescent light bulb. Previously, domestic and industrial light had been provided primarily by candles and lamps, a high-cost source of low-level light, meaning that economic and social activities changed decisively when the sun set. Arguably the incandescent light bulb was a more efficient version of a candle, but the improvements in efficiency were sufficiently drastic to have a transformative effect on society: increasing the work day of laborers, the manufacturing potential of industry, and the social interaction of the public. Though functionally equivalent to the candle, the light bulb’s efficiencies had a transformative effect on candlelit lives. Similarly, uses of technology that transform pedagogy should be viewed differently than those that merely improve efficiencies, even if the transformation results from functional improvement.

To help teachers classify their practices according to RAT, we ask them a series of operationalized evaluation questions (Figure 4), modified from a previous study (Kimmons et al., 2015). Using these questions, teachers must first determine if the use is merely replacement or if it improves student learning. If the use brings improvement, they must determine whether it could be accomplished via lower tech means, making it amplification; if it could not, then it would be transformation.
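The two-step decision process described above can be sketched in code. This is only an illustrative sketch, not the authors' instrument: the function name `classify_rat` and its two boolean parameters are assumptions introduced here, standing in for a teacher's answers to the evaluation questions.

```python
from enum import Enum


class RAT(Enum):
    """The three RAT levels named in the text."""
    REPLACEMENT = "R"
    AMPLIFICATION = "A"
    TRANSFORMATION = "T"


def classify_rat(improves_learning: bool, possible_with_lower_tech: bool) -> RAT:
    """Classify one classroom use of technology (hypothetical helper).

    improves_learning: does the use improve student learning, or does it
        merely replace a previous practice?
    possible_with_lower_tech: if it brings improvement, could the same
        result be accomplished via lower tech means?
    """
    if not improves_learning:
        # No improvement over prior practice: replacement.
        return RAT.REPLACEMENT
    if possible_with_lower_tech:
        # Improvement that lower tech means could also achieve: amplification.
        return RAT.AMPLIFICATION
    # Improvement that depends on the technology: transformation.
    return RAT.TRANSFORMATION
```

Note that the second answer only matters when the first is yes, which mirrors the order in which the questions are asked. The judgments themselves remain with the teacher; the sketch only records the branching.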

Figure 4. Flowchart for determining whether a classroom use of technology is Replacement, Amplification, or Transformation.

PICRAT Matrix

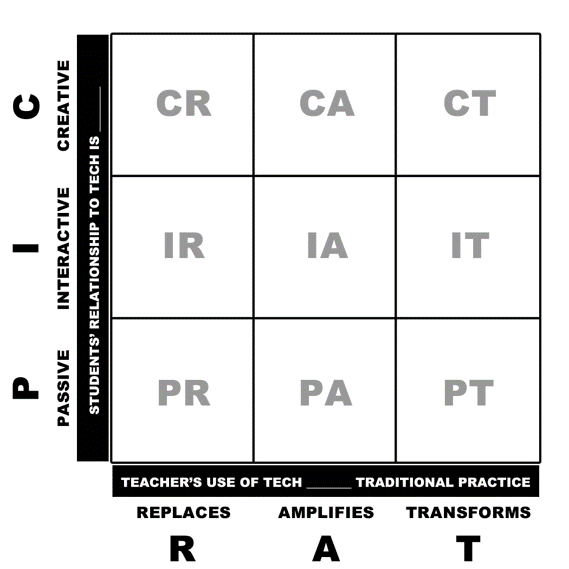

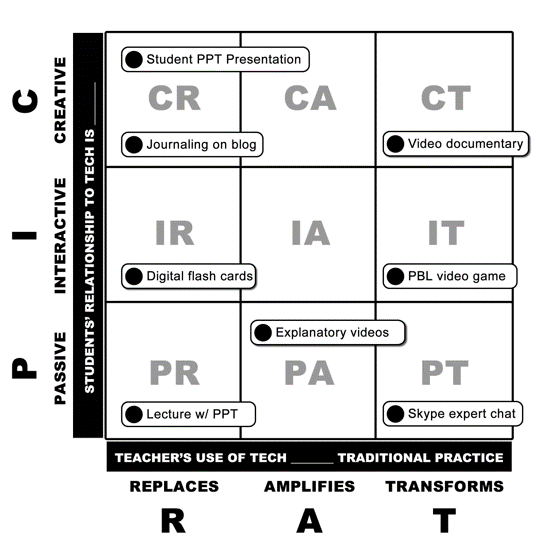

With the three answer levels for each question, we construct a matrix showing nine possibilities for a student teacher to evaluate any technology integration scenario. Using PIC as the y-axis and RAT as the x-axis, the hierarchical matrix (progressing from bottom-left to top-right), which we designate as PICRAT, attempts to fulfill Kuhn’s (2013) call that theoretical models provide suggestions for new and fruitful actions (Figure 5). With this matrix, a teacher can ask the two guiding questions of any technology use and place each lesson plan, activity, or instructional practice into one of the nine cells.
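As a rough illustration of the nine-cell structure, the matrix can be represented as a small data structure. The sketch below is an assumption-laden example rather than part of the model itself: the names `picrat_grid` and `place_activities` are hypothetical, and the two-letter cell codes simply join a PIC letter and a RAT letter.

```python
from typing import Dict, List, Tuple

# PIC forms the y-axis (bottom to top) and RAT the x-axis (left to right).
PIC_LEVELS = ["P", "I", "C"]  # passive, interactive, creative
RAT_LEVELS = ["R", "A", "T"]  # replacement, amplification, transformation


def picrat_grid() -> List[List[str]]:
    """Return the nine cell codes as rows, listed from the top row down.

    The top row is creative (CR, CA, CT) and the bottom row is passive
    (PR, PA, PT), so PR sits at bottom left and CT at top right.
    """
    return [[pic + rat for rat in RAT_LEVELS] for pic in reversed(PIC_LEVELS)]


def place_activities(
    placements: Dict[str, Tuple[str, str]]
) -> Dict[str, List[str]]:
    """Group activities by cell, given a (PIC, RAT) judgment for each one."""
    cells: Dict[str, List[str]] = {
        pic + rat: [] for pic in PIC_LEVELS for rat in RAT_LEVELS
    }
    for activity, (pic, rat) in placements.items():
        if pic not in PIC_LEVELS or rat not in RAT_LEVELS:
            raise ValueError(f"Unknown PICRAT level for {activity!r}: {pic}{rat}")
        cells[pic + rat].append(activity)
    return cells


if __name__ == "__main__":
    # Illustrative placements only; real placements depend on the
    # teacher's context and prior practice.
    example = {
        "lecture with an electronic slideshow": ("P", "R"),
        "student-built interactive kiosk": ("C", "T"),
    }
    for row in picrat_grid():
        print(" ".join(row))
    print(place_activities(example)["PR"])
```

Keeping the two judgments separate until the final step reflects that the student question (PIC) and the teacher question (RAT) are answered independently for each activity.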

Figure 5. The PICRAT matrix.

In our experience, most teachers beginning to integrate technology tend to adopt uses closer to the bottom left (i.e., passive replacement). Therefore, we use this matrix (a) to encourage them to critically consider their own and other practices they encounter and (b) to give them a suggested path to consider in moving their practices toward better practices closer to the top right (i.e., creative transformation).

We use this matrix only at the activity level, not the teacher or course level. Unlike certain previous models that claim to classify an individual’s or a classroom’s overall technology use (e.g., SAMR, TIM), this model recognizes that teachers need to use a variety of technologies to be effective, and use should include activities that span the entire matrix. For instance, Figure 6 provides an example of how teachers might map all of their potential technology activities for a specific unit.

Figure 6. An example of unit activities mapped to PICRAT.

Using the matrix, we would encourage the teacher to think about how lower level uses (e.g., digital flashcards or lecturing with an electronic slideshow) could be shifted to higher level uses (e.g., problem-based learning video games or Skype video chats with experts). RAT depends on the teacher’s pretechnology practices: Previous teaching context and practices dictate the results of RAT evaluation.

As our teachers engage in PICRAT mapping, we encourage reflecting on their practices and on new strategies and approaches the PICRAT model can suggest. We have also created an animated instructional video to introduce the PICRAT model and to orient teachers to this way of thinking (Video 1).

Video 1. PICRAT for Effective Technology Integration in Teaching (https://youtu.be/bfvuG620Bto)

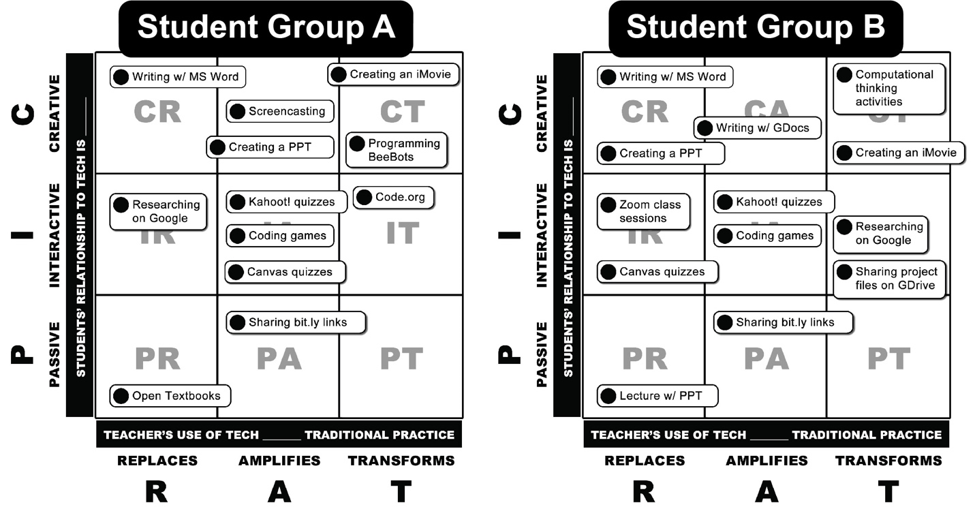

Figure 7 provides an example of how we have used PICRAT to analyze our teacher education courses. Using Google Drawings (https://docs.google.com/drawings/) for collaboration, we have students in each section of the same technology integration course map all of the technology uses they have experienced in their course sequence. As is visible from the figure, students sometimes disagree with one another on how particular technologies and activities should be mapped (e.g., PowerPoint as PR, CR, or CA), but this exercise yields valuable conversations about the nature of activities being undertaken with these technologies, what makes different uses of the same technologies of differential value, and so forth.

Figure 7. An example of categorizing course activities with PICRAT.

Similarly, when students complete technology integration assignments, such as creating a technology-infused lesson plan, we convert PICRAT to a rubric for evaluating their products, rating lower level uses (e.g., PR) at the basic passing level and higher level uses (e.g., CT) at proficient or distinguished levels. This approach helps students understand that technology can be used in a variety of ways and that, though all levels may be useful, some are better than others.

Benefits of PICRAT

In this paper, we have presented the theoretical rationale for the PICRAT model but acknowledge that we cannot definitively claim any of its benefits or negatives until research on the model is completed. Research validating a model typically comes (if it comes at all) after the initial model has been published. Following are the benefits of this model and reasons future research should investigate its utility.

Though the PICRAT model is not perfect or completely comprehensive, it has several benefits over competing models for teacher education with regard to the criteria in Table 3. Our institution has structured all three courses in its technology integration sequence around PICRAT, and students have found the model easy to understand and helpful for conceptualizing technology integration strategies. We generally introduce the model by taking about 5 minutes of class time to present the guiding questions and levels, then ask students to place on the matrix specific activities and technology practices they might have encountered in classrooms. We subsequently use the model as a conceptual frame for course rubrics, assigning grades based on the position of the student’s performance on the model.

One item of feedback that we consistently receive about the model is praise for its clarity. The two questions and nine cells are relatively easy to remember, to understand, and to apply in any situation. In addition to clarity, the model’s scope effectively balances a sufficiently comprehensive range of practices to make it practically useful for classroom teachers and provides a common, usable vocabulary for talking about the nature of the integration.

The major concern with any clear model is that it may oversimplify important aspects of technology integration and ignore important nuances. However, teachers are using PICRAT to interrogate their practice with a suitable balance between directive simplicity and nuanced complexity, with opportunities for both directive guidance and self-reflective critical thinking.

Furthermore, the PICRAT model is highly compatible with other quality educational practices, because it emphasizes technology as supporting strong pedagogy. PICRAT promotes innovative teaching and continually evolving pedagogy, progressing toward transformative practices. The model’s student focus (via PIC) emphasizes student engagement and active/creative learning, naturally encouraging teacher practices that use technology to put students in charge of their own learning, never treating technology as more than a means for achieving this end.

Perhaps the strongest benefit we have found is how PICRAT should meet the fruitfulness criterion by encouraging meaningful conversations and self-talk around teachers’ technology use (Wentworth et al., 2008). Although each square in the matrix is a positive technology application, our hierarchical view of the levels guides teachers to practices that move toward the upper-right corner: the focus on creative learning that transforms teacher practices. These explicit cells in the matrix effectively initiate teacher self-talk and discussions about technology use. For instance, we might ask ourselves how a new technology could be used to amplify interactive learning or support transformative creative learning. As we do so, each square prompts deep reflection about potential teacher practices and shifts emphasis away from the technology itself.

Table 3

Theoretical Evaluation of PICRAT According to Six Criteria of Good Theory

Limitations or Difficulties of PICRAT

At least five difficulties should be considered by teacher educators interested in this model. Some are inherited from RAT; others are unique to PICRAT. The following are noted: (a) confusion regarding creative use, (b) confusion regarding transformative practice, (c) applicability to other educational contexts, (d) evaluations beyond activity level, and (e) disconnects with student outcomes. Following is an explanation of each challenge and guidance on addressing it.

First, the term creative can be confusing for student teachers if not carefully explained, as it might imply that the best technology use is artistic or expressive. In PICRAT, creative is operationalized as artifact creation, generation, or construction. Created artifacts may not be artistic, and not all forms of artistic expression produce worthwhile artifacts. We carefully teach our student teachers that creative is not the same as artistic, but rather that their students should be using technology as a generative or constructive tool for knowledge artifacts.

Second, transformative practice can seem problematic for teachers, a difficulty shared with RAT, mentioned in the Clark–Kozma debate, because such an identification may be subjective and contextual. We have sought to operationalize transformation by providing decision processes or guiding questions to help distinguish amplification from transformation. Doing so does not completely resolve this issue, because transformation is contentious in the literature, but it does provide a process for evaluating teachers’ technology use.

We consider accurately differentiating amplification from transformation in every case to be less important than engaging in self-reflection that considers effects of various instances of technology integration on a teacher’s practice. In grading our students’ work, then, we ask them to provide rationales for labeling technology uses as transformative versus amplifying, allowing us to see their tacit reasoning and, thereby, to perceive misconceptions or growth.

Third, the intended scope of PICRAT has been carefully limited in this article to teacher preparation; our claims should be understood within that context. PICRAT may be applicable to other contexts (e.g., program evaluation or educational administration), but such applications should be considered separately from arguments made for our specific context.

Fourth, full scaling up of PICRAT to unit-, course-, or teacher-level evaluations has not been completed; related problems are apparent even at the lesson plan level. A teacher might plan a lesson using technology in a minor but transformative way (e.g., a 5-minute activity for an anticipatory set), and then use technology as replacement as the lesson continues. Should this lesson plan be evaluated as transformative, as replacement, or as something more nuanced?

Our response is that the evaluation depends on the goal of the evaluator. We typically try to push our teachers to think in transformative ways and to entwine technology throughout an entire lesson, making our level of analysis the lesson plan. Thus, we would likely view short, disjointed, or one-off activities as inappropriate for PICRAT evaluation, focusing instead on the overall tenor of the lesson. Those seeking to use PICRAT for various levels of analysis, however, may need to consider this issue at the appropriate item level.

Finally, the student role in PICRAT focuses on relationships of student activities to the technologies that enable them. It does not explicitly guide teachers to connect technology integration practices to measurable student outcomes. All models described (with the possible exception of TIP) seem to suffer from limitations of this sort, and though the higher order principles illustrated in PICRAT should theoretically lead to better learning, evidence for such learning depends on content, context, and evaluation measures.

PICRAT itself is built on nontechnocentrist assumptions about learning, treating technology as an “opportunity offered us … to rethink what learning is all about” (Papert, 1990, para. 5). For this reason, teacher educators should help student teachers to recognize that using PICRAT only as a guide may not ensure drastic improvements in measurable student outcomes but may, rather, create situations in which deeper learning can occur as technology can be used as a tool for rethinking some of the persistent problems of teaching.

Conclusion

We first explored the roles of theoretical models in educational technology, placing particular emphasis upon teacher preparation surrounding technology integration. We then offered several guidelines for evaluating existing theoretical models in this area and offered the PICRAT model as an emergent answer to the needs of our teacher preparation context and the limitations of prior models in addressing those needs.

PICRAT balances comprehensiveness and parsimony to provide teachers a conceptual tool that is clear, fruitful, and compatible with existing practices and expectations, while avoiding technocentrist thinking. Although we identified five limitations or difficulties with the PICRAT model, we emphasize its strengths as a teaching and self-reflection tool that teacher educators can use in training teachers to integrate technology effectively despite the constantly changing, politically influenced, and intensely contextual nature of this challenge. Future work should include employing the PICRAT model in various practices and settings, while studying how effectively it can guide teacher practices, reflection, and pedagogical change.

References

Amador, J., Kimmons, R., Miller, B., Desjardins, C. D., & Hall, C. (2015). Preparing preservice teachers to become self-reflective of their technology integration practices. In M. L. Niess & H. Gillow-Wiles (Eds.), Handbook of research on teacher education in the digital age (pp. 81-107). Hershey, PA: IGI Global.

Anderson, L. W., Krathwohl, D. R., & Bloom, B. S. (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom’s taxonomy of educational objectives. New York, NY: Longman.

Archambault, L., & Barnett, J. (2010). Revisiting technological pedagogical content knowledge: Exploring the TPACK framework. Computers & Education, 55, 1656–1662. https://doi.org/10.1016/j.compedu.2010.07.009

Archambault, L., & Crippen, K. (2009). Examining TPACK among K–12 online distance educators in the United States. Contemporary Issues in Technology and Teacher Education, 9(1). Retrieved from https://citejournal.org/volume-9/issue-1-09/general/examining-tpack-among-k-12-online-distance-educators-in-the-united-states

Brantley-Dias, L., & Ertmer, P. A. (2013). Goldilocks and TPACK: Is the construct “just right?” Journal of Research on Technology in Education, 46, 103-128. https://doi.org/10.1080/15391523.2013.10782615

Burkhardt, H., & Schoenfeld, A. H. (2003). Improving educational research: Toward a more useful, more influential, and better-funded enterprise. Educational Researcher, 32(9), 3–14. https://doi.org/10.3102/0013189X032009003

Clark, R. E. (1994). Media will never influence learning. Educational Technology Research and Development, 42(2), 21–29. https://doi.org/10.1007/BF02299088

Cuban, L. (1986). Teachers and machines: The classroom use of technology since 1920. New York, NY: Teachers College Press.

Dubin, R. (1978). Theory building (Rev. ed.). New York, NY: Free Press.

Feyerabend, P. K. (1975). Against method: Outline of an anarchistic theory of knowledge. London, England: New Left Books.

Gibbons, A. S., & Bunderson, C. V. (2005). Explore, explain, design. In K. Kempf-Leonard (Ed.), Encyclopedia of social measurement (pp. 927–938). Amsterdam, NE: Elsevier. https://doi.org/10.1016/B0-12-369398-5/00017-7

Graham, C. R. (2011). Theoretical considerations for understanding technological pedagogical content knowledge (TPACK). Computers & Education, 57(3), 1953-1960.

Graham, C. R., Henrie, C. R., & Gibbons, A. S. (2014). Developing models and theory for blended learning research. In A. G. Picciano, C. D. Dziuban, & C. R. Graham (Eds.), Blended learning: Research perspectives (Vol. 2; pp. 13-33). New York, NY: Routledge.

Graham, C. R., & Robison, R. (2007). Realizing the transformational potential of blended learning: Comparing cases of transforming blends and enhancing blends in higher education. In A. G. Picciano & C. D. Dziuban (Eds.), Blended learning: Research perspectives (pp. 83-110). Retrieved from https://onlinelearningconsortium.org/book/blended-learning-research-perspectives

Harmes, J. C., Welsh, J. L., & Winkelman, R. J. (2022). A framework for defining and evaluating technology integration in the instruction of real-world skills. In Y. Rosen, S. Ferrara, & M. Mosharraf (Eds.), Handbook of research on technology tools for real-world skill development (pp. 137–162). CITY, STATE: PUBLISHER. https://doi.org/10.4018/978-1-4666-9441-5.ch006

Hughes, J., Thomas, R., & Scharber, C. (2006). Assessing technology integration: The RAT – Replacement, Amplification, and Transformation – framework. In Proceedings of SITE 2006: Society for Information Technology & Teacher Education International Conference (pp. 1616–1620). Chesapeake, VA: Association for the Advancement of Computing in Education.

Kennewell, S., Tanner, H., Jones, S., & Beauchamp, G. (2008). Analysing the use of interactive technology to implement interactive teaching. Journal of Computer Assisted Learning, 24, 61–73. https://doi.org/10.1111/j.1365-2729.2007.00244.x

Kimmons, R. (2015). Examining TPACK’s theoretical future. Journal of Technology and Teacher Education, 23(1), 53-77.

Kimmons, R., & Hall, C. (2016a). Emerging technology integration models. In G. Veletsianos (Ed.), Emergence and innovation in digital learning: Foundations and applications (pp. 51-64). Edmonton, AB: Athabasca University Press.

Kimmons, R., & Hall, C. (2016b). Toward a broader understanding of teacher technology integration beliefs and values. Journal of Technology and Teacher Education, 24(3), 309-335.

Kimmons, R., & Hall, C. (2022). How useful are our models? Pre-service and practicing teacher evaluations of technology integration models. TechTrends, 62, 29-36. doi:10.1007/s11528-017-0227-8

Kimmons, R., & Johnstun, K. (2022). Navigating paradigms in educational technology. TechTrends, 63(5), 631-641. doi:10.1007/s11528-019-00407-0

Kimmons, R., Miller, B., Amador, J., Desjardins, C., & Hall, C. (2015). Technology integration coursework and finding meaning in pre-service teachers’ reflective practice. Educational Technology Research and Development, 63(6), 809-829.

Kimmons, R., & West, R. (n.d.). PICRAT for effective technology integration in teaching. YouTube. Retrieved from https://www.youtube.com/watch?v=bfvuG620Bto

Koehler, M. J., & Mishra, P. (2009). What is technological pedagogical content knowledge? Contemporary Issues in Technology and Teacher Education, 9(1). Retrieved from https://citejournal.org/volume-9/issue-1-09/general/what-is-technological-pedagogicalcontent-knowledge

Kozma, R. B. (1991). Learning with media. Review of Educational Research, 61, 179–211. https://doi.org/10.3102/00346543061002179

Kuhn, T. S. (1996). The structure of scientific revolutions (3rd ed.). Chicago, IL: The University of Chicago Press.

Kuhn, T. S. (2013). Objectivity, value judgment, and theory choice. In A. Bird & J. Ladyman (Eds.), Arguing about science (pp. 74–86). New York, NY: Routledge.

Mishra, P., & Koehler, M. J. (2007). Technological pedagogical content knowledge (TPCK): Confronting the wicked problems of teaching with technology. In R. Carlsen, K. McFerrin, J. Price, R. Weber, & D. A. Willis (Eds.), Proceedings of SITE 2007: Society for Information Technology & Teacher Education International Conference (pp. 2214–2226). Chesapeake, VA: Association for the Advancement of Computing in Education.

Moersch, C. (1995). Levels of technology implementation (LoTi): A framework for measuring classroom technology use. Learning and Leading With Technology, 23, 40–42.

Papert, S. (1987). Computer criticism vs. technocentric thinking. Educational Researcher, 16(1), 22–30. https://doi.org/10.3102/0013189X016001022

Papert, S. (1990). A critique of technocentrism in thinking about the school of the future. Retrieved from ://www.papert.org/articles/ACritiqueofTechnocentrism.html

Papert, S., & Harel, I. (1991). Situating constructionism. Constructionism, 36(2), 1–11.

Popper, K. (1959). The logic of scientific discovery. London, UK: Routledge.

Puentedura, R. R. (2003). A matrix model for designing and assessing network-enhanced courses. Hippasus. Retrieved from ://www.hippasus.com/resources/matrixmodel/

Roblyer, M. D., & Doering, A. H. (2013). Integrating educational technology into teaching (6th ed.). Boston, MA: Pearson.

Roblyer, M. D., & Hughes, J. E. (2022). Integrating educational technology into teaching (8th ed.). New York, NY: Pearson.

Selwyn, N. (2010). Looking beyond learning: Notes towards the critical study of educational technology. Journal of Computer Assisted Learning, 26, 65–73. https://doi.org/10.1111/j.1365-2729.2009.00338.x

Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27, 425–478. https://doi.org/10.2307/30036540

Wentworth, N., Graham, C. R., & Monroe, E. E. (2009). TPACK development in a teacher education program. In L. T. W. Hin & R. Subramaniam (Eds.), Handbook of research on new media literacy at the K-12 level: Issues and challenges (Vol. II, pp. 823–838). Hershey, PA: IGI Global.

Wentworth, N., Graham, C. R., & Tripp, T. (2008). Development of teaching and technology integration: Focus on pedagogy. Computers in the Schools, 25(1/2), 64–80. doi:10.1080/07380560802157782

Whetten, D. A. (1989). What constitutes a theoretical contribution? Academy of Management Review, 14, 490–495. https://doi.org/10.5465/amr.1989.4308371

Willingham, D. T. (2012). When can you trust the experts? How to tell good science from bad in education. San Francisco, CA: Jossey-Bass.


What is the framework for technology integration?

This framework is called TPACK, or Technological, Pedagogical, and Content Knowledge. It takes these three main forms of knowledge and explores how they interact with one another. True technology integration occurs when these three areas work together to create a dynamic learning experience for all students.

Why must teachers be equipped with TPACK in teaching social studies?

As previously noted, TPACK is essential to enabling teachers to implement ICT in their teaching, as it enables teachers to select and use hardware and software, identify the affordances (or lack thereof) of specific features, and use the tools in pedagogically appropriate and effective ways.

In what ways can using graphing calculators aid students in understanding mathematical concepts?

They allow students to more easily organize and analyze data.

What is the most significant benefit of instituting distance education within a school district?

It offers a way to provide educational equity between rural and poorer students and those who come from larger, more affluent districts.
